Smarter Integration with Smart Connections

Adeptia makes it easy to connect with any data format and protocol.

Welcome to the Future of Data Integration

Adeptia Automate acts as a central hub for organizations to manage their data by streamlining data integration across various applications and systems. Build powerful data automations in an easy-to-use, no-code platform.

API

With Adeptia Automate, APIs act as intermediaries, allowing companies to expose specific data points and functionalities to authorized partners. Adeptia not only supports calling and consuming APIs, but also publishing automations as APIs. This eliminates reliance on manual processes like data entry or file transfers, reducing errors and inefficiencies.

EDI

Ditch the manual work associated with traditional Electronic Data Interchange (EDI) integration software. Adeptia offers a modern, next-generation EDI automation solution that leverages AI to improve data quality, minimize errors and provide real-time visibility and control across the EDI lifecycle. Update and modernize your legacy EDI with real-time connectivity, immediate responses to partners and combine EDI with APIs for a seamless experience.

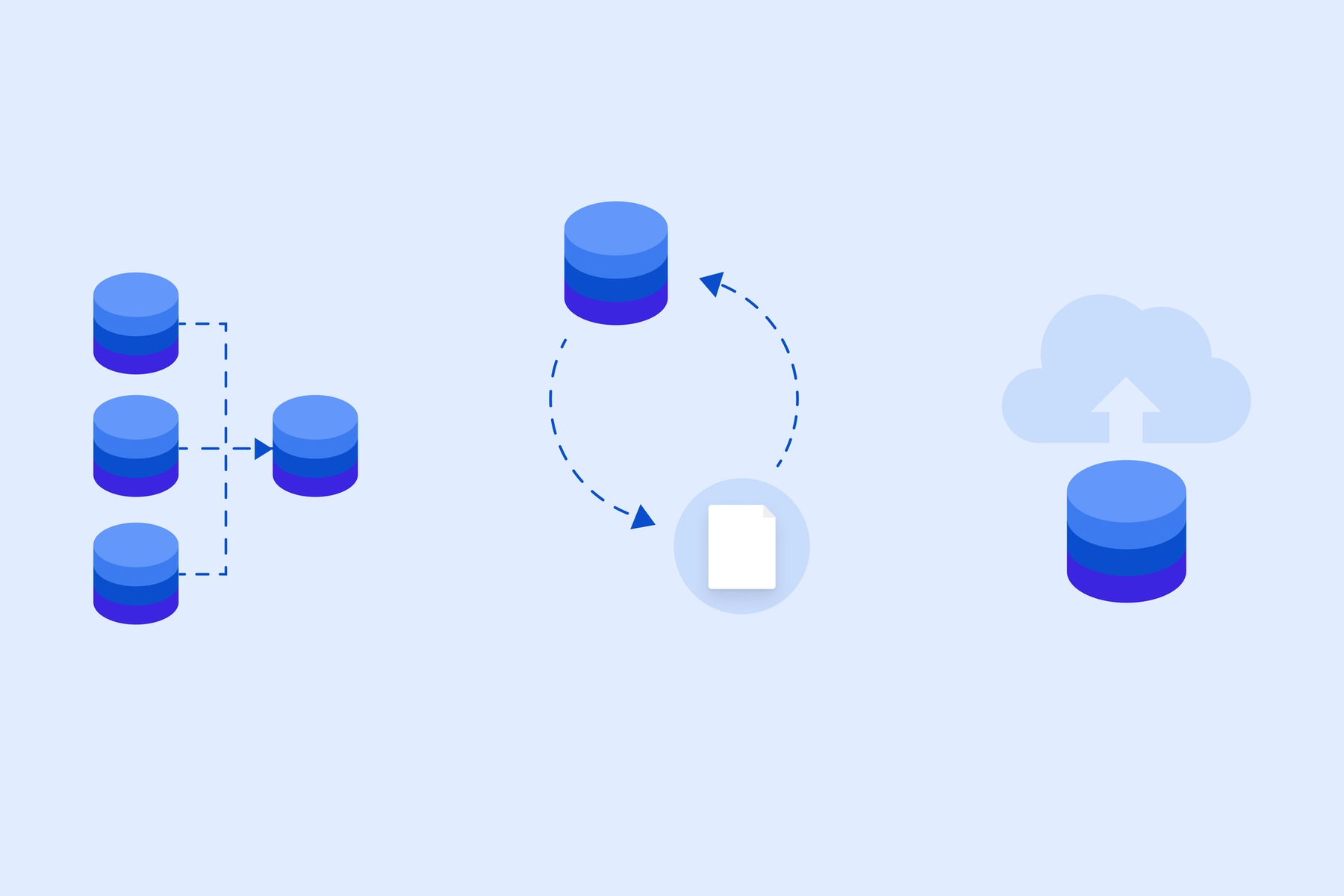

ETL/ELT

In addition to traditional ETL (extract, transform, load), Adeptia provides the ability to perform transformations on the staged data before loading it into the destination, a process known as ELT (extract, load, transform). By separating loading from transformation, ELT can handle growing data volumes more efficiently. Eliminate the need for manual data mapping, transformation and validation rules when setting up ETL pipelines with Adeptia’s powerful AI.

iPaaS

Connect your applications for seamless data exchange between systems using a collaborative business application that enables both IT and less-technical users. Adeptia has pre-built connectors to hundreds of applications, enabling you to set up integrations in minutes.

Files

Adeptia uses advanced AI to turn structured and unstructured data from files into your preferred format, validate the data and then deliver it to your applications and systems. Integrate structured files as well as unstructured invoices, purchase orders, and other documents with your enterprise systems.

See Adeptia in Action

Schedule a personalized demo and discover how Adeptia can help you move faster, work smarter, and scale with confidence.

Resources

Related Resources

5 Data Problems That Derail Carrier Transitions (And What to Do About Them)

Carrier transitions are supposed to be a fresh start. New rates, better service, improved benefits. What they actually tend to be is a stress test on your data, your systems, your timelines, and every...

The Hidden Cost of Delayed Asset Onboarding in Retirement Benefit Services

Delayed retirement plan onboarding costs providers $40K+ monthly in deferred revenue. Learn how Intelligent Data Automation cuts transition times by 80%....

The Enterprise Automation Shift: AI Agents, First-Mile Data, and the Future of Integration

AI agents, first-mile data, and intelligent integration will reshape enterprise automation in 2026—driving AI-ready systems across insurance, finance, and SaaS....